Aaron Giles

Programming War Stories

Microsoft

After the Connectix engineering staff was snapped up by Microsoft, I moved from the San Francisco Bay Area to the Pacific Northwest and started a new life.

There was no time to waste once we got there, so we started right away on rebranding Virtual PC for Windows as a Microsoft product, and followed that up with a rebrand of Virtual Server as well. Then it was on to making new stuff, so I ended up co-leading the effort to create Microsoft’s own virtualization solution, Hyper-V.

I then decided to ditch the whole management gig and got involved with efforts to port Windows NT to the ARM platform, which involved an exciting switch to an entirely new ISA in mid-stream. I followed that up by taking on the task of porting Windows yet again, this time to ARM’s new 64-bit architecture. After doing the initial bringup, I helped out on the Cobalt (x86-to-ARM jitting) and Chakra (JavaScript compiler) projects.

Microsoft Virtual PC 2004

When we developed Virtual PC for Windows at Connectix, we designed it for speed, and the best way to make it scream was to take complete and utter control of the entire machine for brief periods of time without the underlying OS knowing about it.

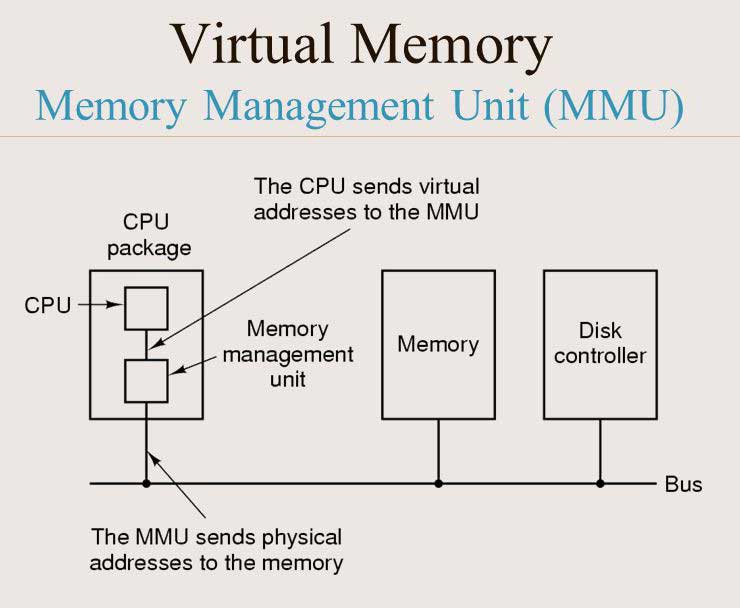

The reason this got us great performance was that we could directly leverage the MMU on the processor to manage memory. Without that, every single memory access would involve verifying permissions and mappings, and that would have crippled performance.
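
To make that cost concrete, here is a minimal Python sketch of what a purely software approach has to do on every single guest memory access. The 4 KiB page size, the dictionary-based table, and the names `SoftMMU` and `translate` are illustrative assumptions of mine, not a description of Virtual PC's internals.

```python
PAGE_SIZE = 4096  # assumed 4 KiB pages


class PageFault(Exception):
    """Raised when a guest access has no mapping or lacks permission."""


class SoftMMU:
    """Toy software MMU: every access pays for a lookup and a permission check."""

    def __init__(self):
        # guest page number -> (host page number, writable flag)
        self.pages = {}
        self.lookups = 0  # counts how often the slow path is taken

    def map(self, guest_page, host_page, writable):
        self.pages[guest_page] = (host_page, writable)

    def translate(self, guest_addr, is_write):
        self.lookups += 1
        page, offset = divmod(guest_addr, PAGE_SIZE)
        entry = self.pages.get(page)
        if entry is None:
            raise PageFault(f"no mapping for {guest_addr:#x}")
        host_page, writable = entry
        if is_write and not writable:
            raise PageFault(f"write to read-only page at {guest_addr:#x}")
        return host_page * PAGE_SIZE + offset
```

With the hardware MMU doing this work, none of these steps cost extra instructions on the common path, which is the whole point of the takeover described here.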

In order to begin taking over the machine, we first had to gain access to kernel mode. To do this, we just identified an unused interrupt vector in the OS’s interrupt vector table, and patched it to point to our own code, which lived in user mode. (Security experts are rightly freaking out at reading this.)

Once in control, we would run as long as we could, until an interrupt was signaled. When that happened, we would immediately put the OS’s page tables back and then hop to the OS’s interrupt handling code, pretending like nothing at all had happened.

A good analogy is if, right after leaving for work, someone breaks into your house, rearranges your furniture, and hangs out. Then, when they see you pull up in the driveway, they put everything back exactly the way it was and slip out the window, with you none the wiser.
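
The take-over-and-put-back sequence described above can be modeled in a few lines. This is only a toy simulation under my own assumptions: `ToyMachine`, `run_guest_slice`, and the string-valued page table root are hypothetical stand-ins, and real code would be swapping processor state rather than Python attributes.

```python
class ToyMachine:
    """Stand-in for the CPU state the host OS cares about."""

    def __init__(self):
        self.page_table_root = "host"  # whose page tables are active
        self.handled = []              # interrupts the host OS has seen

    def host_interrupt_handler(self, irq):
        # The host must only ever run with its own page tables in place.
        assert self.page_table_root == "host"
        self.handled.append(irq)


def run_guest_slice(machine, guest_steps, interrupt_after, irq):
    """Run the guest until it finishes or an interrupt arrives.

    Returns the number of guest steps that actually executed.
    """
    saved_root = machine.page_table_root
    machine.page_table_root = "guest"      # take over the machine

    executed = 0
    interrupted = False
    while executed < guest_steps:
        if executed == interrupt_after:    # an interrupt was signaled
            interrupted = True
            break
        executed += 1

    machine.page_table_root = saved_root   # put everything back first
    if interrupted:
        machine.host_interrupt_handler(irq)  # then hand off to the host
    return executed
```

The ordering is the important part: the host's page tables are restored before its interrupt handler runs, so from the host's point of view nothing ever changed.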

Of course, the other thing that was happening around the time of our purchase was the release of Windows XP Service Pack 2, which was the big security-focused version of Windows XP. All code had to go through a security audit, especially code from outsiders.

Thus we were told that we needed to come up with a clean and proper way to do this switch, which involved installing a driver that could be called with proper permission checking by the OS.

Apart from security improvements, the rest of our efforts in this release went toward rebranding and minor tweaks. All told, it took a little more than a year to complete the work, and then it was time to move on to the real reason Microsoft bought Connectix: creating the next generation.

Building Hyper-V

By the time we began work on Hyper-V, VMware had already made significant inroads in defining and dominating the x86 virtualization market. Microsoft wanted to make virtualization a key part of Windows Server 2008, and the Connectix engineers were at the heart of this effort.

One advantage of starting late is that both Intel and AMD were in the process of introducing virtualization extensions that formalized virtual machine support in the processor. (We eventually retrofitted that support into later versions of Virtual PC as well.)

The effort to develop Hyper-V was big enough that we split it into three parts: one team handled the hypervisor, one team handled VMBus and virtual devices (like networking and storage), and I led up the team that handled the rest, including the user-facing parts of the product.

Even within my third of the project, we were dealing with a very broad collection of components, including:

- The VID (Virtualization Infrastructure Driver), which talked directly to the hypervisor to control virtual machines at the lowest level.

- The VMWP (Virtual Machine Worker Process), which served as a proxy for each virtual machine running on the system.

- The VMMS (Virtual Machine Management Service), which hosted WMI classes that enabled the creation and management of machines from the outside world.

- The Virtual Machine Manager MMC snap-in that provided a basic user interface for managing machines. (The expectation was that there would be more fully-featured managers developed later down the road.)

Over the course of the project, my team grew from around 6 to 12 people, and I ended up not actually writing very much code, as managing a team that size required all my attention.

As a result, after the successful launch of Hyper-V as part of Windows Server 2008, I decided to take a break from management and became an individual contributor once again. This meant finding something fun to work on.

Unfortunately, these things don’t always happen quickly. I killed time working on a couple of related projects, including system support for mounting VHD images that shipped in Windows 7. And then I found what was probably the perfect project for me.

Windows on ARM

It was in 2009 when I first heard of a small incubation effort to get Windows up and running on ARM processors. At the time I encountered it, the project was being driven by one person, who actually already had the OS booting with little official help.

But the time was right for official ARM support to become important. The dawn of multicore mobile processors was here, and Windows CE’s lack of MP support was beginning to put Microsoft at an even greater disadvantage.

Since ARM processors were getting more powerful all the time, and since NT was designed from the start to support running with multiple processors, it seemed inevitable that someone would make it an official ask soon, so why not start now?

This project, it turned out, was absolutely perfect for my skills. It involved both porting (which I had done at LucasArts and in my MAME work) and bare metal programming (which I had done throughout my career dating back to my early games).

One of the first things we did was to define the runtime environment requirements. At a minimum, we chose to standardize on modern ARMv7 processors, and to require both hardware floating-point support (VFP) and SIMD support (NEON).

Doing this meant we could ignore a lot of backwards compatibility issues and focus on just supporting the high-end fully-featured processors like the ARM Cortex A9/A15 and the Qualcomm Snapdragon class of CPUs.

Switching To a New ISA

Another controversial call we made was to fully embrace the Thumb-2 ISA. Since there was no legacy code running on these systems, there was no need to support two ISAs (both A32 and Thumb), so we standardized on the more compact form throughout the OS.
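
Part of what makes Thumb-2 compact is that it mixes 16-bit and 32-bit instructions in one stream. As a small illustration, the sketch below applies the length rule from the ARM architecture manuals: a halfword whose top five bits are `0b11101`, `0b11110`, or `0b11111` starts a 32-bit instruction, and anything else is a complete 16-bit instruction. The function names are mine, and this is a decoding aid for illustration only, not anything from the Windows sources.

```python
def thumb_instruction_size(first_halfword):
    """Return the size in bytes of a Thumb-2 instruction from its first 16 bits."""
    top5 = (first_halfword >> 11) & 0x1F
    return 4 if top5 in (0b11101, 0b11110, 0b11111) else 2


def instruction_sizes(halfwords):
    """Walk a list of 16-bit halfwords and return each instruction's size."""
    sizes = []
    i = 0
    while i < len(halfwords):
        size = thumb_instruction_size(halfwords[i])
        sizes.append(size)
        i += size // 2  # skip the second halfword of a 32-bit instruction
    return sizes


# BX LR (16-bit), a BL (32-bit, two halfwords), then B . (16-bit)
stream = [0x4770, 0xF000, 0xF800, 0xE7FE]
sizes = instruction_sizes(stream)
```

Here `sizes` comes out as `[2, 4, 2]`: three instructions in eight bytes, where the fixed-width A32 encoding would need twelve.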

This ISA decision meant our compiler division needed to create new versions of the compiler optimized for Thumb-2, and also needed to support the ARM form of the NT exception unwinding model, which I ended up designing.

A lot of the work I did involved digging into other people’s code and removing bad assumptions. Over the course of its history, Windows NT has actually run on a lot of different CPU architectures: MIPS, Alpha, PowerPC, IA64 (aka Itanium), and both x86 and x64.

But over time, all except for the Intel-based ones (IA64, x86, and x64) had been dropped, and even IA64 was on the way out. So a certain myopia had inevitably crept into some of the code, and we had to help educate people once again about RISC platforms like ARM.

All in all, it was very rewarding getting the full version of Windows up and running on a new platform. We actually had pretty much everything running smoothly before the concept of Windows RT was introduced, which put some artificial limitations into the system.

Eventually, Windows RT shipped on the Microsoft Surface and Surface 2 tablets. Shortly thereafter Windows Phone 8 shipped, using Windows NT as its foundation instead of Windows CE.

Of course, it’s well known that those original ARM-based Surface models floundered, and Windows Phone struggled to find market share. But we weren’t giving up on ARM as a viable target for Windows! Also, ARM was busy developing a 64-bit variant with even better alignment to Windows’ requirements, leading to the next chapter in my Microsoft adventure....

ARM Goes 64-Bit

It was back in mid-2011 when we officially learned of ARM’s new AArch64 architecture, a fresh design that took most of what was good about ARM’s 32-bit instruction set, tossed out the rest, and added support for 64-bit registers. Overall, I have to say they did a fantastic job—it’s now become my favorite ISA to write for.

The person I had partnered with to bring up ARM on Windows wanted no part of doing it all over again for 64-bit, so it fell to me to take on the early work of hacking up Windows for yet another new architecture. But first I had to finish up my previous ARM commitments, which was good because we had a hard dependency on the compiler team, who needed time to build up the necessary compiler backends and linker support.

Once we got started, however, things moved pretty quickly. The compiler was in pretty solid shape even in those early days, and soon we had Windows Core (the kernel and a command prompt) booting on ARM’s simulator, just 7 months after we began in earnest.

At that time, there was no actual ARM64 hardware available to run Windows on, so we were stuck fixing and debugging things through a slow software simulator that ran at the equivalent of somewhere between 10 and 100 MHz. Unfortunately, it would be another four months before we got our first shot at real silicon.

Eventually we did get our hands on one of the first physical bits of ARM64 hardware from Applied Micro, and with a bit of work to overcome some interesting surprises (like there being no physical memory at address 0), we were able to get things up and running.
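
To show why a surprise like that bites, here is a small sketch of per-page bookkeeping that does not assume RAM starts at physical address 0. The base address and size in the example are invented for illustration (they are not the actual memory map of that hardware), and `PhysicalMemory` is a hypothetical name.

```python
PAGE_SHIFT = 12  # assumed 4 KiB pages


class PhysicalMemory:
    """Tracks page frames for a RAM region that may start anywhere."""

    def __init__(self, ram_base, ram_size):
        self.ram_base = ram_base
        self.ram_size = ram_size
        self.frames = [None] * (ram_size >> PAGE_SHIFT)

    def contains(self, addr):
        return self.ram_base <= addr < self.ram_base + self.ram_size

    def frame_index(self, addr):
        # Index relative to the start of RAM, not relative to address 0.
        if not self.contains(addr):
            raise ValueError(f"{addr:#x} is not backed by RAM")
        return (addr - self.ram_base) >> PAGE_SHIFT


# Hypothetical layout: 256 MiB of RAM starting at 0x40_0000_0000
mem = PhysicalMemory(0x40_0000_0000, 0x1000_0000)
```

Code that computes `addr >> PAGE_SHIFT` directly, or that treats address 0 as usable scratch memory, works by accident on machines where RAM begins at 0 and falls over on a layout like this one.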

Running on real hardware at decent speeds was a breath of fresh air, and soon other hardware platforms followed. Each of these took some time and effort, but having more hardware available made it possible to scale out the effort of improving the ARM64 port by involving more and more people.

Finally, a bit more than 2.5 years after getting started, all of our ARM64 work was integrated in Windows’ main branch. In the meantime, we continued to work on finding and fixing problems, and also figuring out where we would actually first deploy Windows for ARM64 ... would it be on phones? On servers? Or on the desktop?

Cobalt and ARM64

One of the big failings of the Surface RT was that it lost one of Windows’ main advantages: the ability to run legacy applications compiled for Intel platforms. Therefore it was natural to wonder: would it be possible to dynamically translate compiled x86 code into ARM code on the fly? And what kind of performance could we get out of that?

Thus, while we were just getting started working on the port of Windows to ARM64, another team began investigating this question on the existing 32-bit ARM version of Windows, a project codenamed Cobalt.

Now, Windows has long supported running 32-bit programs on 64-bit processors via a system known as WoW64 (Windows on Windows 64), and it had even supported running 32-bit Intel x86 programs on architectures such as Alpha or IA64 that didn’t have any Intel-compatible hardware support for doing so. To do this, it would employ a translation layer, such as the famous FX!32 used in the Alpha port of Windows NT.

So this parallel team needed to not only develop an x86-to-ARM translator, but it also needed to update WoW64 to support running 32-bit Intel x86 programs on another 32-bit hosting architecture (ARM). This latter aspect had not been tried before!

My involvement in this prototype was limited to being an advisor, primarily because we decided that the best starting point for an x86-to-ARM translator would be the source code for the x86-to-PowerPC translator we already had, namely Virtual PC for Macintosh. And, well, I had some experience with that code.

In the end, the prototype demonstrated that things could be done reasonably well, but performance was not great. However, forthcoming ARM64 chips promised to offer significant speed improvements, and so the decision was made to get the prototype up and running on ARM64.

However, all we had at this point was an x86-to-ARM (32-bit) translator, not a native x86-to-ARM64 translator, which would require a substantial rewrite. So as a quick and dirty initial approach, I helped engineer a hybrid solution that would compile x86-to-ARM (32-bit), and then we would switch the processor into 32-bit mode to execute it, and would return to 64-bit mode when finished.

While this worked, it was less than ideal. First, the switch back and forth between 32-bit and 64-bit mode added overhead. Further, 32-bit ARM has only 16 registers (and only 13–14 are really usable for translated code), which introduced a lot of complexity into the translation process, as register usage had to be carefully managed.

On ARM64, however, we had 32 registers and could make the translation simpler by hard-coding much of the register usage in the translated code. I personally thought the hybrid approach was crazy, and it was setting off all of my “this could be done so much better in native code” bells, so I volunteered to create a native x86-to-ARM64 translator.

So I did, and we saw an immediate performance boost as a result. However, working on the translator was not my main job, and so I handed my work back off to the other team who built in lots of optimizations on top of my work to produce the finished result, which is now shipping in real systems (as of 2018).
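
The idea of hard-coding register usage can be sketched as follows. With 32 registers on the host, each of the eight x86 general-purpose registers can simply live in a fixed ARM64 register, so the translator never has to allocate or spill them. The particular assignment below is hypothetical (the real one is not described here), the sketch handles only the register-to-register form of `add`, and it ignores EFLAGS entirely.

```python
# Hypothetical fixed home for each x86 register (32-bit ARM64 register names).
X86_TO_ARM64 = {
    "eax": "w0", "ecx": "w1", "edx": "w2", "ebx": "w3",
    "esp": "w4", "ebp": "w5", "esi": "w6", "edi": "w7",
}


def translate_add(dst, src):
    """Translate x86 `add dst, src` into ARM64 assembly text.

    x86 is two-operand (dst += src); ARM64 is three-operand, so the
    destination register appears twice. Flag updates are not modeled.
    """
    d = X86_TO_ARM64[dst]
    s = X86_TO_ARM64[src]
    return f"add {d}, {d}, {s}"


translated = translate_add("eax", "ebx")
```

In this sketch `translated` is `"add w0, w0, w3"`. Because the mapping is a constant table, every translated block agrees on where guest state lives, and plenty of host registers remain free for temporaries.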

More jitting

After completing my Cobalt work, I went on a much-needed 3-month sabbatical, where I focused much more on the musical side of things. When I returned, I came back to work on more general OS-level improvements to ARM64, along with helping out efforts to make ARM64 a first-class Windows target architecture.

As we were getting Windows ready to ship on the first ARM64 devices, we ran into an issue with the Edge web browser. We really wanted to ship Edge as 64-bit native, since it ran faster and had better security mitigations. However, Edge relied on the Chakra engine to execute JavaScript code, and nobody had had the time to write a native ARM64 backend.

Thus, the initial plan was to ship the 32-bit version of Edge on these ARM64 devices, running on WoW64, and leveraging the already-extant ARM port of Chakra. This didn’t sit well with me, and after some discussion, we decided that if I could jump in and help bootstrap the Chakra port to ARM64, then maybe we could get something up and running in reasonable time.

So I took a big chunk of the backend code I had written for Cobalt and ported it into the Chakra environment, and then spent several months working with a few of the Chakra developers to wire it all up for ARM64. This was definitely an interesting side project that I was happy to get started, but eventually I had to let it go and head back to my day job.

And I’d tell you about the next chapter in my Microsoft adventure if I could, but unfortunately it’s not public knowledge, so that story will have to wait until another time....